ZJU-Leaper: A Benchmark Dataset for Fabric Defect Detection

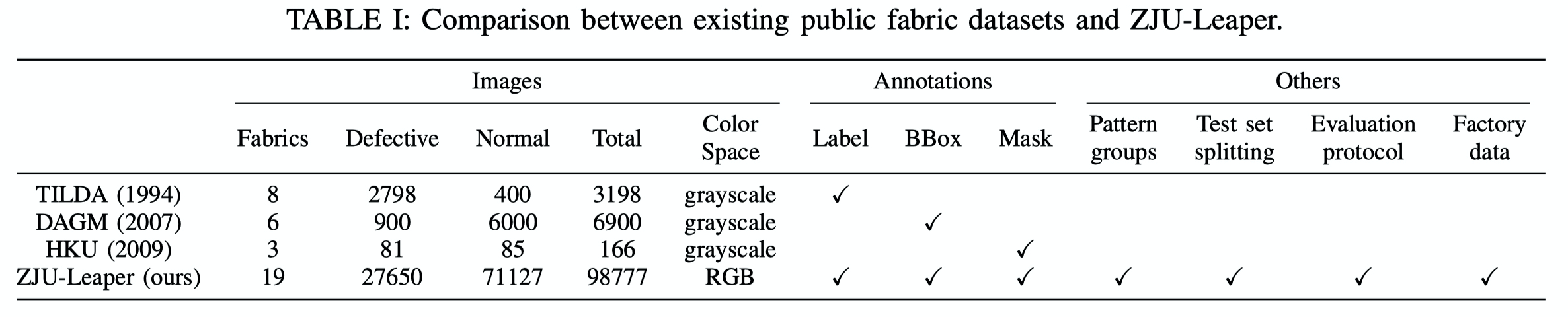

Datasets Comparison

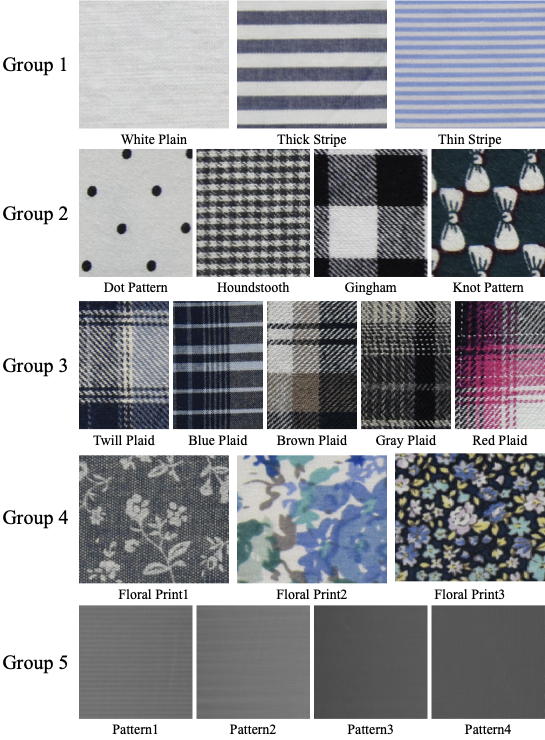

Fabric Patterns

Dataset Statistics

Fabric Groups

- Group 1: White Plain, Thick Stripe, Thin Stripe;

- Group 2: Dot Pattern, Houndstooth, Gingham, Knot Pattern;

- Group 3: Twill Plaid, Blue Plaid, Brown Plaid, Gray Plaid, Red Plaid;

- Group 4: Floral Print 1, Floral Print 2, Floral Print 3;

- Group 5: Pattern 1, Pattern 2, Pattern 3, Pattern 4;
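The grouping above can be written down as a small lookup table, which is handy when per-group results need to be aggregated. This is only a sketch: the pattern names are the labels used in this README, and the names `FABRIC_GROUPS`, `PATTERN_TO_GROUP`, and `group_of` are hypothetical helpers, not part of the dataset's tooling. How the downloaded files actually name each pattern is not stated here.

```python
# Group membership exactly as listed in this README.
# Assumption: these are display labels, not necessarily dataset folder names.
FABRIC_GROUPS = {
    1: ["White Plain", "Thick Stripe", "Thin Stripe"],
    2: ["Dot Pattern", "Houndstooth", "Gingham", "Knot Pattern"],
    3: ["Twill Plaid", "Blue Plaid", "Brown Plaid", "Gray Plaid", "Red Plaid"],
    4: ["Floral Print 1", "Floral Print 2", "Floral Print 3"],
    5: ["Pattern 1", "Pattern 2", "Pattern 3", "Pattern 4"],
}

# Reverse index: pattern name -> group number.
PATTERN_TO_GROUP = {
    pattern: group
    for group, patterns in FABRIC_GROUPS.items()
    for pattern in patterns
}


def group_of(pattern: str) -> int:
    """Return the group number of a pattern, or raise ValueError if unknown."""
    try:
        return PATTERN_TO_GROUP[pattern]
    except KeyError:
        raise ValueError(f"unknown pattern: {pattern!r}") from None
```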

Task Settings

- Setting 1: Normal samples only (annotation-free). Applies when defect-free fabric images are cheap to obtain but defect images are hard to acquire.

- Setting 2: A small amount of defect data (with mask annotation). Applies when a few defect images and their annotations can be obtained at low cost, alongside many normal images.

- Setting 3: A large amount of defect data (with label annotation). Applies when methods for the first two settings fail to train an effective inspection model and defect labels can be obtained quickly.

- Setting 4: A large amount of defect data (with bounding-box annotation). Requires more labor, since each defect is marked with a bounding box that gives its rough position and size.

- Setting 5: A large amount of defect data (with mask annotation). The last resort, used when masking every defect in a large number of samples becomes necessary.
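The five settings differ along two axes: how much defect data is available and what kind of annotation comes with it. A minimal sketch of that structure follows. The class `TaskSetting`, the list `TASK_SETTINGS`, and the string values (`"none"`, `"small"`, `"large"`, `"label"`, `"bbox"`, `"mask"`) are illustrative names chosen here, not identifiers from the dataset or the baseline code.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class TaskSetting:
    number: int
    defect_data: str            # "none", "small", or "large"
    annotation: Optional[str]   # None, "label", "bbox", or "mask"


# One entry per setting, in the order listed above.
TASK_SETTINGS = [
    TaskSetting(1, "none", None),      # normal samples only, annotation-free
    TaskSetting(2, "small", "mask"),
    TaskSetting(3, "large", "label"),
    TaskSetting(4, "large", "bbox"),
    TaskSetting(5, "large", "mask"),
]


def settings_with_annotation(annotation: Optional[str]) -> List[int]:
    """Return the numbers of all settings that use the given annotation type."""
    return [s.number for s in TASK_SETTINGS if s.annotation == annotation]
```

For example, `settings_with_annotation("mask")` returns the two settings that rely on pixel masks, which differ only in the amount of defect data.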

Evaluation Metrics

- Pixel-level

- Region-level

- Sample-level
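As a rough illustration of how the pixel-level and sample-level scores might be computed, the sketch below derives an F1 score from binary masks given as nested lists of 0/1 values. It rests on assumptions that this README does not confirm: pixels are pooled across all images before scoring, and an image counts as defective when its mask contains any non-zero pixel. Region-level scoring needs a rule for matching predicted regions to annotated ones, which is not stated here, so it is left out. The function names are hypothetical.

```python
def f1_score(tp, fp, fn):
    """F1 = 2*TP / (2*TP + FP + FN); 0.0 when there are no positives at all."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def pixel_f1(pred_masks, true_masks):
    """Pool pixels over all images, then compute a single F1 (assumed pooling)."""
    tp = fp = fn = 0
    for pred, true in zip(pred_masks, true_masks):
        for pred_row, true_row in zip(pred, true):
            for p, t in zip(pred_row, true_row):
                if p and t:
                    tp += 1
                elif p:
                    fp += 1
                elif t:
                    fn += 1
    return f1_score(tp, fp, fn)


def sample_f1(pred_masks, true_masks):
    """Score images as defective/normal; 'any non-zero pixel' is an assumed rule."""
    tp = fp = fn = 0
    for pred, true in zip(pred_masks, true_masks):
        p = any(any(row) for row in pred)
        t = any(any(row) for row in true)
        if p and t:
            tp += 1
        elif p:
            fp += 1
        elif t:
            fn += 1
    return f1_score(tp, fp, fn)


# Three tiny 2x2 images: one hit with an extra pixel, one miss, one false alarm.
pred = [[[0, 1], [0, 1]], [[0, 0], [0, 0]], [[1, 0], [0, 0]]]
true = [[[0, 1], [0, 0]], [[0, 0], [1, 0]], [[0, 0], [0, 0]]]
print(pixel_f1(pred, true))   # 0.4
print(sample_f1(pred, true))  # 0.5
```

Pure-Python lists keep the sketch dependency-free; on real images an array library would be the practical choice.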

Other Resources

- Dataset download link

- Codes of baseline experiments

- Implementation of existing methods

Related Paper

The paper titled "ZJU-Leaper: A Benchmark Dataset for Fabric Defect Detection and a Comparative Study" was accepted by IEEE Transactions on Artificial Intelligence.

Citation Tip

@article{zju-leaper,
  author={Zhang, Chenkai and Feng, Shaozhe and Wang, Xulongqi and Wang, Yueming},
  journal={IEEE Transactions on Artificial Intelligence},
  title={ZJU-Leaper: A Benchmark Dataset for Fabric Defect Detection and a Comparative Study},
  year={2020},
  volume={1},
  number={3},
  pages={219-232},
  doi={10.1109/TAI.2021.3057027},
  issn={2691-4581},
  publisher={IEEE},
}

Performance Benchmark

Baseline models

| Model | Setting | F1_pix | F1_reg | F1_sam | Note |
|---|---|---|---|---|---|
| SC | Setting 1 | 0.169 | 0.107 | 0.468 | Sparse coding |
| CAE | Setting 1 | 0.187 | 0.103 | 0.432 | Convolutional auto-encoder |
| OCSVM | Setting 1 | 0.118 | 0.071 | 0.469 | One-class SVM |
| U-Net | Setting 2 | 0.558 | 0.299 | 0.711 | U-Net model |
| U-Net(TL) | Setting 2 | 0.603 | 0.424 | 0.739 | U-Net with transfer learning |
| U-Net(DA) | Setting 2 | 0.588 | 0.335 | 0.779 | U-Net with data augmentation |
| U-Net(label) | Setting 3 | 0.224 | 0.113 | 0.587 | Training with label annotations |
| U-Net(bbox) | Setting 4 | 0.683 | 0.538 | 0.84 | Training with bounding-box annotations |
| U-Net | Setting 5 | 0.906 | 0.724 | 0.856 | Training with all mask annotations, costly |